Introduction

Which server hosting provider to use is a hot question nowadays. The industry has boomed. The only thing that matters, though, is the reliability of a provider. By reliable I mean continuity of service with no interruptions, a mutual understanding that problems can happen, and the urgency to correct problems so that operation continues with minimal downtime. Ten years ago, myLoc was a good company. Nowadays, they’re so cheap and bad that I’m leaving them. I guess inflation didn’t just inflate the currency.

Why write this in a blog post?

Because people on the internet have become so un-empathetic, robotic, and exhausting to talk to or have a discussion with, and my blog will minimize that because no one cares about my blog. Maybe it’s a diary at this point. I can’t remember a single time in the last few years when I posted something on social media and didn’t get people licking the boots of corporations under the guise of “violating their terms” or whatever other dumb reason. As if common sense doesn’t have any value anymore. Trust isn’t of any value. I’m quite sure some genius out there will find a way to twist this story and make it entirely my fault, because humanity now evaluates moral decisions based on legality… what a joke we’ve become. Nowadays, you’re not allowed to be angry. You can’t be upset. You shouldn’t be frustrated. You can’t make mistakes. If you express any of your feelings about an experience, your expression, regardless of how reasonable it is, will be called a rant and dismissed. So, I guess my blog is the best place to put this “rant”, if you will. Who cares after all?!

I guess this will have a better chance to reach people than any robotic social media out there.

Story starts with bad internet connection

A few months ago I contacted ServDiscount (a subsidiary of myLoc, where my server is, IIUC) about constant internet connection problems (ticket 854255-700134-52). The connection problems started about a year ago, but recently got really bad (while my home internet runs at around 1 Gbps). The problem showed up in many ways:

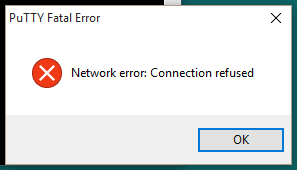

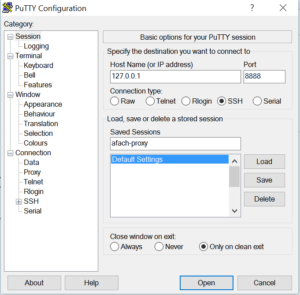

- File copying through sftp/ssh was almost impossible. The speed kept getting throttled until the download stopped.

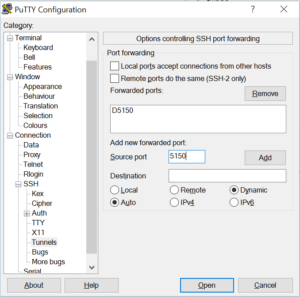

- Whenever I used an ssh tunnel, the terminal became unusable while the tunnel was transferring anything; even a small amount of data was enough to block it.

- http(s) downloads of large files have become impossible; a simple attempt to download a file of 400 MB can’t be accomplished.

- Games whose server software ran on my server there could never be finished because of connection loss.

- ftps downloads constantly show “connection reset by peer”, which I believe is the problem with everything else. The server-side connection was just resetting all the time.

As a patient and busy person, I didn’t care. This went on for MONTHS. I’m not exaggerating. Then the problem got out of control. So, under the assumption that “it’s my fault”, I rented a separate VPS from ServDiscount, and guess what? Same problems. The VPS had NOTHING on it but a game server (plus an iptables firewall with a blocking input policy and a few open ports), yet it was impossible to finish a game or two. People from all around the world would get disconnected after a while.

Bad support, all the way (starting October)

When I contacted support about this connection problem, the first blind response was “it’s my fault”. Because for this (bad) company, it can never be their fault. All their configuration is perfect. Everything they do is perfect. They can never make mistakes. That’s what pissed me off. They couldn’t explain the disconnects on the gaming server OR on my original server. But of course, they claimed it might be a “botnet”… because why not? Since it’s virtually impossible to prove that no botnet is present, they can never lose this argument. Yet with the second server in the picture, it was easy to show that something was wrong on their side. The problem was easy to reproduce, since a plain http download would fail. It was right there, ready to view, test and debug. So, after that pressure, some guy in their support said “I’ll take a look”, and asked for access. I took the risk, created an account for them with sudo rights and added their public key, but they never logged in. That was easy to see in the logs, for weeks. Just empty promises with zero accountability.

After I nagged them, they said “they’re busy”… great support. As if I’m asking for a favor or something.

Then I got exhausted, and to appease them, I decided to wipe my server. I wish it were that easy.

Get your backups, if you can

Because of these internet issues, my backups were trapped on that server. I tried to download them in many ways, but the server side of my backup software was constantly resetting the connection. Keep in mind that this software had been in use for years. It’s not like I invented these solutions recently or something. In fact, my only “invention”, because of these problems, was to make these scripts run multiple times a day… just in an attempt to get the downloads to happen. For over a month, I couldn’t download any backups. My backup logs were flooded with “connection reset by peer” errors. What the hell am I supposed to do now?
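To illustrate what “running the scripts multiple times a day” is trying to achieve, here is a minimal sketch of a download that resumes from the last received byte after a reset instead of starting over. It is not my actual backup script. The names `download_with_resume` and `fetch_chunk` are made up for this example, and it assumes the transfer method can continue from a byte offset.

```python
import time
from typing import Callable


def download_with_resume(
    fetch_chunk: Callable[[int], bytes],  # hypothetical: returns bytes starting at offset
    total_size: int,
    max_attempts: int = 5,
    delay_seconds: float = 0.0,
) -> bytes:
    """Collect total_size bytes, resuming at the current offset after each reset."""
    data = bytearray()
    consecutive_failures = 0
    while len(data) < total_size:
        try:
            chunk = fetch_chunk(len(data))
            if not chunk:
                # Treat an empty read before the end like a dropped connection.
                raise ConnectionResetError("empty read before end of file")
            data.extend(chunk)
            consecutive_failures = 0  # progress was made, so reset the counter
        except ConnectionResetError:
            consecutive_failures += 1
            if consecutive_failures >= max_attempts:
                raise  # give up after too many resets in a row
            time.sleep(delay_seconds)
    return bytes(data)
```

The point of the design is that every successful chunk is kept, so a reset costs only the chunk in flight. With a connection as bad as the one I had, even that may not be enough, but it beats restarting a multi-gigabyte transfer from zero.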

Well, I remained patient. It was a mistake. I should’ve probably escalated and gotten angry? But I don’t think they would’ve done anything. When it’s a bad company, it’s a bad company.

Docker, as a tool to easily move away from bad situations

I didn’t know too much about Docker other than that it’s a containerization tool, and I had barely used it. In fact, I used LXD more, because it’s modeled around preserving whole OSs, while Docker is modeled around stateless containers, where the software is renewed constantly but the data is preserved.

The idea was simple: Instead of having to install all services on bare metal and suffer during migrations when incompetent support treats me like this, I could just tar the containers with their data, move them somewhere else, and continue operation as if nothing happened. This way, I’ll save time I don’t really have, since I now work as a lead engineer and can’t really keep solving the same problems the way I did back when I was in academia. Sad fact of life: The older you grow, the less time you have.

So, I started learning Docker. Watched tutorials, read articles, how-tos, and many other things. I built images, did many things, and things were going fine. I containerized almost all my services. But one service was the cause of all evil…

Containerizing email services… big mistake and lots learned

The problem with containerization is that you’ll often need a proxy to deliver your connections. You can set up your Docker container to share the host’s network, but I find that ugly, and it defeats the isolation picture of Docker. My email server was proxied through haproxy.

Unfortunately, because of proxying, and because of my lack of understanding of how that worked (because I was learning), I misconfigured my email proxy and all the incoming connections to the email server were seen as “internal” connections because the source was the proxy software from within the server’s network, which basically meant that all incoming email relay requests were accepted.
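The mistake is easier to see in a small sketch. This is not my real configuration: the subnet, the addresses and the function names are made up for illustration. It only shows why a relay rule based on “is the sender inside my network?” breaks once every connection arrives through a proxy that lives inside that network.

```python
import ipaddress
from typing import Optional

# Hypothetical internal subnet (e.g. a Docker bridge network); not the real one.
TRUSTED_NETWORKS = [ipaddress.ip_network("172.18.0.0/16")]


def is_trusted(ip: str) -> bool:
    """Return True if the address lies inside one of the trusted networks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in TRUSTED_NETWORKS)


def may_relay(peer_ip: str, real_client_ip: Optional[str] = None) -> bool:
    """Decide whether an unauthenticated sender may relay mail.

    peer_ip is the TCP peer the mail server sees (the proxy, when proxied).
    real_client_ip is the original client address, if the proxy forwards it.
    """
    effective_ip = real_client_ip if real_client_ip is not None else peer_ip
    return is_trusted(effective_ip)


# The proxy container is the TCP peer, so an outside spammer looks "internal".
print(may_relay("172.18.0.5"))                   # open relay: allowed
# With the original client address forwarded, the same connection is refused.
print(may_relay("172.18.0.5", "203.0.113.7"))    # refused
```

One common way to get the original address to the mail server is the PROXY protocol, which haproxy can send. Whether that fits depends on the mail server in use, so treat it as a pointer and not as the exact fix I applied.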

Our Nigerian friends and their prince were ready

Once that mistake was made, within a day, the server was being used as a relay for spam.

ServDiscount and their reaction

On 16.12.2022, because one email ended in a spam trap, the “UCEProtect” service flagged my server, and let’s try to guess what ServDiscount/myLoc did:

1. Contacted me, informing me of the issue and asking me to correct it

2. Switched the server to rescue mode, so that I could investigate the issue through the logs

3. Completely blocked access to my server, with no way for me to reach the logs and understand the problem

4. Refused to unblock the server (even in rescue mode) unless UCEProtect gave its stamp of approval

Yep, it’s 3 and 4.

It’s completely understandable to block my server. After all, it was sending spam. No question there. But the annoying part is that I only found out about this whole thing by coincidence, when I tried to access my server and couldn’t. They only sent an email, which I didn’t receive because my email server was blocked. They didn’t attempt a phone call, an SMS or anything. They didn’t even open a ticket IN MY ACCOUNT WITH THEM!

You might wonder whether it can get any more careless, passive and negligent from their end. Yes, it can!

Remember that I couldn’t even download my backups because of their crappy internet connection, which they didn’t diagnose or help fix. So I’m literally now trapped with no email access, my data is locked (for who knows how long), and… it gets worse.

I contacted their customer support. What do you think the answer was?

1. We understand. Let us switch your server to rescue mode so that you can understand what happened

2. Remove your server from UCEProtect’s list (by paying them money) then come back

You guessed it. It’s no. 2.

And again, remember, I can’t even gain rescue access to my server. So until this point, I don’t even have an idea what happened.

I went to UCEProtect and paid them around $100 (it was a Friday), then went back to customer support. Guess their response:

1. Thank you for removing your server from the list. Here’s access back so that you can investigate the issue.

2. We have zero communication with the “abuse department”, so wait for them to react… fill in the form they sent to the email address that you can’t access.

You also guessed it. No. 2.

No amount of tickets, phone-calls, or begging helped. A robot would’ve understood better that I couldn’t access my email so I have no way of responding.

Whether it’s with bad policy or otherwise, they never help when you need help. They couldn’t care less. So what if your server was stopped for days or even weeks based on UCEProtect listing? We don’t care, and we won’t help you fix the problem.

DNS change

While support was completely ignoring me, it occurred to me that I could switch my email MX DNS record to another service and receive my emails there. Thanks to how MTAs (mail transfer agents) work, they don’t give up so easily. On 17.12.2022, I got the email at another email provider, which I paid for just to solve this problem, and filled in the form (without knowing why the issue happened, because again, how the hell would I know without access to my logs, even in rescue mode?!).
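Here is a rough sketch of why the MX switch worked. It is a toy model, not how any particular MTA is implemented, and the host names and the function `try_deliver` are invented for the example. A sending server looks up the MX records, tries the hosts in order of preference (lowest number first), and if none is reachable it keeps the message queued and tries again later, looking the records up again.

```python
from typing import Callable, List, Optional, Tuple

MxRecord = Tuple[int, str]  # (preference, host); lower preference is tried first


def try_deliver(
    lookup_mx: Callable[[], List[MxRecord]],
    is_reachable: Callable[[str], bool],
) -> Optional[str]:
    """Return the host that accepted the mail, or None if delivery is deferred."""
    for _preference, host in sorted(lookup_mx()):
        if is_reachable(host):
            return host
    return None  # deferred: the sender keeps the message queued and retries


# Hypothetical setup: the old mail server is blocked, the new provider is up.
reachable_hosts = {"mx.newprovider.example"}
dns = {"mx": [(10, "mail.blocked-server.example")]}

first_try = try_deliver(lambda: dns["mx"], lambda h: h in reachable_hosts)

# The MX record is switched to the new provider before the next retry.
dns["mx"] = [(10, "mx.newprovider.example")]
second_try = try_deliver(lambda: dns["mx"], lambda h: h in reachable_hosts)

print(first_try, second_try)
```

How long a sender keeps retrying is up to its own configuration, so this only works if the record is changed before the sender gives up.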

The holy abuse department is on the other side of the universe

So although I did everything they asked for (I filled in the form and removed my IP address from UCECrap), I didn’t get my server back until days later. Because the abuse department is simply unreachable. There’s basically zero urgency to solve such a problem for a customer (who’s been with them for 10 years). Who gives a flying f**k if your server and work are halted for days?! Not myLoc, apparently.

Let’s summarize

- Bad internet connection with no help, so I couldn’t even get my backups

- No urgency to solve a problem I obviously didn’t cause deliberately, with zero value placed on my being with them for 10 years

- No access to the server through rescue mode or VPN, yet I had to explain how the outbreak happened without logs, who knows how

- Only contact through email, which was blocked, which they never addressed

- Even after fulfilling their conditions, no one cares. No one helped, because the “abuse department” is on the other side of the universe.

What’s the point of having a server with a company that treats its customers like that?

What’s the point when accountability is too much to ask?

I don’t know.

Conclusion

I’m not gonna sit here and claim that I’m perfect and none of this is my responsibility. But at the very least I expect common-sense treatment of customers, urgency to help, a reasonable way to access the server to see the logs, whether in rescue mode or VPN or whatever, and a reachable “abuse department” that doesn’t hold all the keys with no accountability to customers. All this, and add that I’ve been with them for a decade.

But basically, the lesson here is, if anything goes wrong with your server at myLoc, you can go f**k yourself. No one will help you. You don’t really matter to them. Other companies will simply put your server in rescue mode and ask you to fix the problem. myLoc will simply block you. Maybe they’re taking a cut from UCEProtect? I don’t know. But you can only wonder why a company would be that stupid. I’m out.

Have a great one.